| << Chapter < Page | Chapter >> Page > |

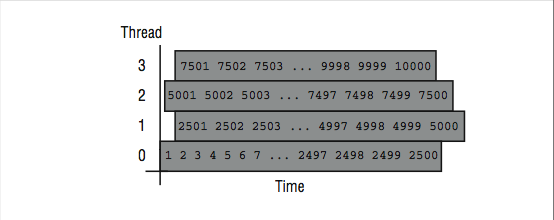

These two example loops can show how these iteration scheduling approaches might operate when executing with four threads. In the vector-add loop, static scheduling would distribute iterations 1–2500 to Thread 0, 2501–5000 to Thread 1, 5001–7500 to Thread 2, and 7501–10000 to Thread 3. In [link] , the mapping of iterations to threads is shown for the static scheduling option.

Since the loop body (a single statement) is short with a consistent execution time, static scheduling should result in roughly the same amount of overall work (and time if you assume a dedicated CPU for each thread) assigned to each thread per loop execution.

An advantage of static scheduling may occur if the entire loop is executed repeatedly. If the same iterations are assigned to the same threads that happen to be running on the same processors, the cache might actually contain the values for

A ,

B , and

C from the previous loop execution.

The operating system and runtime library actually go to some lengths to try to make this happen. This is another reason not to have more threads than available processors, which causes unnecessary context switching. The runtime pseudo-code for static scheduling in the first loop might look as follows:

C VECTOR ADD - Static Scheduled

ISTART = (THREAD_NUMBER * 2500 ) + 1IEND = ISTART + 2499

DO ILOCAL = ISTART,IENDA(ILOCAL) = B(ILOCAL) + C(ILOCAL)

ENDDO

It’s not always a good strategy to use the static approach of giving a fixed number of iterations to each thread. If this is used in the second loop example, long and varying iteration times would result in poor load balancing. A better approach is to have each processor simply get the next value for

IPROB each time at the top of the loop.

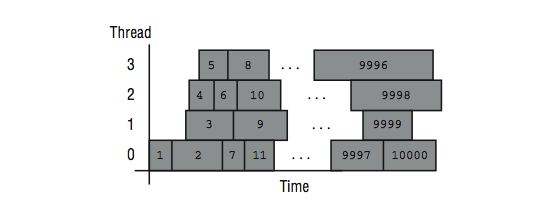

That approach is called dynamic scheduling , and it can adapt to widely varying iteration times. In [link] , the mapping of iterations to processors using dynamic scheduling is shown. As soon as a processor finishes one iteration, it processes the next available iteration in order.

Iteration assignment in dynamic scheduling

If a loop is executed repeatedly, the assignment of iterations to threads may vary due to subtle timing issues that affect threads. The pseudo-code for the dynamic scheduled loop at runtime is as follows:

C PARTICLE TRACKING - Dynamic Scheduled

IPROB = 0WHILE (IPROB<= 10000 )

BEGIN_CRITICAL_SECTIONIPROB = IPROB + 1

ILOCAL = IPROBEND_CRITICAL_SECTION

RANVAL = RAND(ILOCAL)CALL ITERATE_ENERGY(RANVAL)

ENDWHILE

ILOCAL is used so that each thread knows which iteration is currently processing. The

IPROB value is altered by the next thread executing the critical section.

While the dynamic iteration scheduling approach works well for this particular loop, there is a significant negative performance impact if the programmer were to use the wrong approach for a loop. For example, if the dynamic approach were used for the vector-add loop, the time to process the critical section to determine which iteration to process may be larger than the time to actually process the iteration. Furthermore, any cache affinity of the data would be effectively lost because of the virtually random assignment of iterations to processors.

In between these two approaches are a wide variety of techniques that operate on a chunk of iterations. In some techniques the chunk size is fixed, and in others it varies during the execution of the loop. In this approach, a chunk of iterations are grabbed each time the critical section is executed. This reduces the scheduling overhead, but can have problems in producing a balanced execution time for each processor. The runtime is modified as follows to perform the particle tracking loop example using a chunk size of 100:

IPROB = 1

CHUNKSIZE = 100WHILE (IPROB<= 10000 )

BEGIN_CRITICAL_SECTIONISTART = IPROB

IPROB = IPROB + CHUNKSIZEEND_CRITICAL_SECTION

DO ILOCAL = ISTART,ISTART+CHUNKSIZE-1RANVAL = RAND(ILOCAL)

CALL ITERATE_ENERGY(RANVAL)ENDDO

ENDWHILE

The choice of chunk size is a compromise between overhead and termination imbalance. Typically the programmer must get involved through directives in order to control chunk size.

Part of the challenge of iteration distribution is to balance the cost (or existence) of the critical section against the amount of work done per invocation of the critical section. In the ideal world, the critical section would be free, and all scheduling would be done dynamically. Parallel/vector supercomputers with hardware assistance for load balancing can nearly achieve the ideal using dynamic approaches with relatively small chunk size.

Because the choice of loop iteration approach is so important, the compiler relies on directives from the programmer to specify which approach to use. The following example shows how we can request the proper iteration scheduling for our loops:

C VECTOR ADD

C$OMP PARALLEL DO PRIVATE(IPROB) SHARED(A,B,C) SCHEDULE(STATIC)DO IPROB=1,10000

A(IPROB) = B(IPROB) + C(IPROB)ENDDO

C$OMP END PARALLEL DOC PARTICLE TRACKING

C$OMP PARALLEL DO PRIVATE(IPROB,RANVAL) SCHEDULE(DYNAMIC)DO IPROB=1,10000

RANVAL = RAND(IPROB)CALL ITERATE_ENERGY(RANVAL)

ENDDOC$OMP END PARALLEL DO

Notification Switch

Would you like to follow the 'High performance computing' conversation and receive update notifications?