
C$OMP PARALLEL DO SHARED(A,B) PRIVATE(I,TMP1,TMP2)
      DO I=1,1000000
        TMP1 = ( A(I) ** 2 ) + ( B(I) ** 2 )
        TMP2 = SQRT(TMP1)
        B(I) = TMP2
      ENDDO
C$OMP END PARALLEL DO

The iteration variable I also must be a thread-private variable. As the different threads increment their way through their particular subset of the arrays, they don’t want to be modifying a global value for I.
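To try the loop, it has to sit inside a complete program. The following is a minimal sketch rather than the author's code: the program name PRIVDEMO, the smaller array size N = 1000, the 3-4-5 test data, and the serial check at the end are all assumptions made for illustration. It uses the `!$OMP` sentinel, which OpenMP treats the same way as `C$OMP` in fixed-form source.

```fortran
      PROGRAM PRIVDEMO
      IMPLICIT NONE
      INTEGER N
      PARAMETER (N = 1000)
      REAL A(N), B(N), TMP1, TMP2
      INTEGER I, NBAD

! Assumed test data: a 3-4-5 triangle, so every result should be 5.0
      DO I = 1, N
        A(I) = 3.0
        B(I) = 4.0
      ENDDO

! A and B are shared by all threads; each thread gets its own
! copy of I, TMP1 and TMP2, so no thread overwrites another's values
!$OMP PARALLEL DO SHARED(A,B) PRIVATE(I,TMP1,TMP2)
      DO I = 1, N
        TMP1 = ( A(I) ** 2 ) + ( B(I) ** 2 )
        TMP2 = SQRT(TMP1)
        B(I) = TMP2
      ENDDO
!$OMP END PARALLEL DO

! Serial check after the parallel loop has finished
      NBAD = 0
      DO I = 1, N
        IF (B(I) .NE. 5.0) NBAD = NBAD + 1
      ENDDO
      WRITE(*,'(A,I0)') 'wrong results: ', NBAD
      END
```

With GNU Fortran, for example, the directives are honored when the program is compiled with `gfortran -fopenmp`; without that flag they are ordinary comments and the loop runs serially with the same result.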

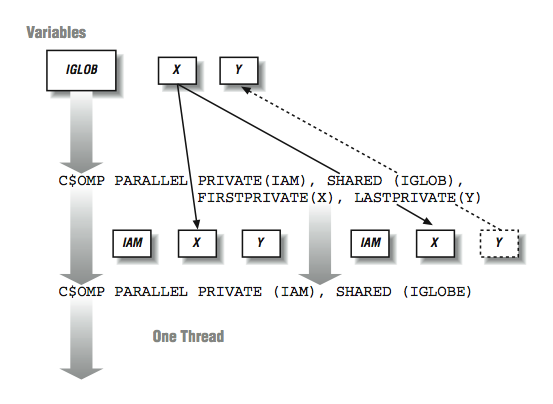

There are several other options for how data is handled across the threads. The following summarizes some of the other data semantics available:

Each vendor may have different terms to indicate these data semantics, but most support all of these common semantics. [link] shows how the different types of data semantics operate.
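As an illustration of such semantics, the sketch below uses three data-sharing clauses that OpenMP defines for PARALLEL DO: FIRSTPRIVATE, LASTPRIVATE, and REDUCTION. That these are the semantics the summary refers to is an assumption, and the program name CLAUSES, the variables FAC, LAST, and TOT, and the test data are hypothetical.

```fortran
      PROGRAM CLAUSES
      IMPLICIT NONE
      INTEGER N
      PARAMETER (N = 100)
      REAL A(N), FAC, LAST, TOT
      INTEGER I

      DO I = 1, N
        A(I) = REAL(I)
      ENDDO
      FAC = 2.0
      LAST = 0.0
      TOT = 0.0

! FAC:  each thread's private copy starts out holding 2.0
! LAST: the value from iteration I = N is copied back afterwards
! TOT:  per-thread partial sums are combined when the loop ends
!$OMP PARALLEL DO FIRSTPRIVATE(FAC) LASTPRIVATE(LAST) REDUCTION(+:TOT)
      DO I = 1, N
        LAST = FAC * A(I)
        TOT = TOT + LAST
      ENDDO
!$OMP END PARALLEL DO

      WRITE(*,'(A,I0)') 'LAST = ', NINT(LAST)
      WRITE(*,'(A,I0)') 'TOT = ', NINT(TOT)
      END
```

With a plain PRIVATE(FAC), each thread's copy of FAC would be undefined on entry to the loop, and with PRIVATE(LAST) the value of LAST after the loop would not be defined. The test values are small whole numbers, so the order in which the partial sums are combined does not affect the printed total.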

Now that we have the data environment set up for the loop, the only remaining problem that must be solved is which threads will perform which iterations. It turns out that this is not a trivial task, and a wrong choice can have a significant negative impact on our overall performance.

There are two basic techniques (along with a few variations) for dividing the iterations in a loop between threads. We can look at two extreme examples to get an idea of how this works:

C VECTOR ADD
      DO IPROB=1,10000
        A(IPROB) = B(IPROB) + C(IPROB)
      ENDDO

C PARTICLE TRACKING
      DO IPROB=1,10000
        RANVAL = RAND(IPROB)
        CALL ITERATE_ENERGY(RANVAL)
      ENDDO

In both loops, all the computations are independent, so if there were 10,000 processors, each processor could execute a single iteration. In the vector-add example, each iteration would be relatively short, and the execution time would be relatively constant from iteration to iteration. In the particle tracking example, each iteration chooses a random number for an initial particle position and iterates to find the minimum energy. Each iteration takes a relatively long time to complete, and there will be a wide variation of completion times from iteration to iteration.
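One way to express the difference between the two loops is OpenMP's SCHEDULE clause, sketched below. Pairing SCHEDULE(STATIC) with the vector add and SCHEDULE(DYNAMIC) with the uneven loop is an assumption about how the two extremes might be handled, not a statement of the author's method. RAND and ITERATE_ENERGY are not defined in the text, so the inner K loop is a hypothetical stand-in whose cost varies from iteration to iteration. The array E, the chunk size of 16, and the program name SCHED are likewise arbitrary.

```fortran
      PROGRAM SCHED
      IMPLICIT NONE
      INTEGER N
      PARAMETER (N = 10000)
      REAL A(N), B(N), C(N), E(N), X
      INTEGER IPROB, K, NSTEP

      DO IPROB = 1, N
        B(IPROB) = 1.0
        C(IPROB) = 2.0
      ENDDO

! Uniform cost per iteration: split the range into equal blocks once
!$OMP PARALLEL DO SCHEDULE(STATIC)
      DO IPROB = 1, N
        A(IPROB) = B(IPROB) + C(IPROB)
      ENDDO
!$OMP END PARALLEL DO

! Uneven cost per iteration: idle threads fetch the next 16 iterations
!$OMP PARALLEL DO SCHEDULE(DYNAMIC,16) PRIVATE(K,NSTEP,X)
      DO IPROB = 1, N
! Stand-in workload: between 1 and 1000 steps, depending on IPROB
        NSTEP = MOD(IPROB*37, 1000) + 1
        X = 0.0
        DO K = 1, NSTEP
          X = X + 1.0
        ENDDO
        E(IPROB) = X
      ENDDO
!$OMP END PARALLEL DO

      WRITE(*,'(A,I0)') 'A(N) = ', NINT(A(N))
      WRITE(*,'(A,I0)') 'E(1) = ', NINT(E(1))
      END
```

The static split costs almost nothing at run time but assumes every iteration takes about the same time. The dynamic split pays a small cost each time a thread fetches a chunk, in exchange for keeping threads busy when iteration times vary widely.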

These two examples are effectively the ends of a continuous spectrum of the iteration scheduling challenges facing the FORTRAN parallel runtime environment:
