
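Say that a stationary zero-mean Gaussian source $x(n)$ has autocorrelation $r_x(0) = 1$, $r_x(1) = \rho$, and $r_x(k) = 0$ for $k > 1$. For a bit rate of $R$ bits per sample, uniformly-quantized PCM implies a mean-squared reconstruction error of

$$\sigma_r^2\big|_{\text{PCM}} = \gamma_x \sigma_x^2 \, 2^{-2R} = \gamma_x \, 2^{-2R}.$$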

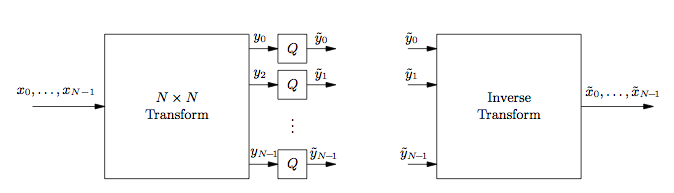

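For transform coding, say we choose the linear transform

$$T = \frac{1}{\sqrt{2}} \begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix}.$$

Setting $x(m) = \big(x(2m),\, x(2m-1)\big)^{t}$ and $y(m) = T x(m)$, we find that the transformed coefficients have variance

$$\sigma_{y_0}^2 = \tfrac{1}{2} E\{|x(2m) + x(2m-1)|^2\} = 1 + \rho, \qquad \sigma_{y_1}^2 = \tfrac{1}{2} E\{|x(2m) - x(2m-1)|^2\} = 1 - \rho,$$

and using uniformly-quantized PCM on each coefficient, at $R_0$ and $R_1$ bits respectively, we get mean-squared reconstruction errors

$$\sigma_{q_0}^2 = \gamma_x (1 + \rho) \, 2^{-2R_0}, \qquad \sigma_{q_1}^2 = \gamma_x (1 - \rho) \, 2^{-2R_1}.$$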

We use the same quantizer performance factor $\gamma_x$ as before since linear operations preserve Gaussianity.
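The coefficient variances can be checked numerically. The sketch below assumes the scaled sum/difference transform $T = \frac{1}{\sqrt{2}}\begin{pmatrix}1 & 1\\ 1 & -1\end{pmatrix}$, a unit-variance source with lag-one correlation $\rho$, and an illustrative value $\rho = 0.8$; the variable names are hypothetical. It uses the fact that the covariance of $y = Tx$ is $T R_x T^{t}$.

```python
import numpy as np

rho = 0.8  # illustrative correlation value (an assumption, not from the text)

# Covariance of a pair of adjacent samples: r_x(0) = 1, r_x(1) = rho.
R_x = np.array([[1.0, rho],
                [rho, 1.0]])

# Sum/difference transform, scaled so that T @ T.T is the identity.
T = np.array([[1.0, 1.0],
              [1.0, -1.0]]) / np.sqrt(2.0)

# Covariance of y = T x; the diagonal holds the coefficient variances.
R_y = T @ R_x @ T.T
var_y0, var_y1 = np.diag(R_y)

print("var(y0) =", var_y0, " expected 1 + rho =", 1 + rho)
print("var(y1) =", var_y1, " expected 1 - rho =", 1 - rho)
```

The off-diagonal entries of `R_y` come out as zero here, so for this source the transform also decorrelates the pair.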

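For orthogonal matrices $T$, i.e., $T^{-1} = T^{t}$, we can show that the mean-squared reconstruction error $\sigma_r^2$ equals the mean-squared quantization error:

$$\sigma_r^2 = \tfrac{1}{2} E\{\|\hat{x}(m) - x(m)\|^2\} = \tfrac{1}{2} E\{\|T^{t} q(m)\|^2\} = \tfrac{1}{2} E\{q^{t}(m)\, T T^{t} q(m)\} = \tfrac{1}{2} E\{\|q(m)\|^2\} = \tfrac{1}{2}\big(\sigma_{q_0}^2 + \sigma_{q_1}^2\big),$$

where $q(m)$ is the vector of quantization errors on the transform coefficients and $\hat{x}(m) = T^{t}\big(y(m) + q(m)\big)$ is the reconstruction.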
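The claim that an orthogonal transform carries quantization error over to reconstruction error unchanged can be spot-checked numerically. The sketch below is only an illustration under stated assumptions: it uses the 2×2 sum/difference transform, and it stands in for the quantization errors with hypothetical uniformly distributed noise of unequal scale on the two coefficients.

```python
import numpy as np

rng = np.random.default_rng(0)

# Orthogonal 2x2 sum/difference transform.
T = np.array([[1.0, 1.0],
              [1.0, -1.0]]) / np.sqrt(2.0)

# Stand-in quantization errors (hypothetical): one column per block,
# with a larger error on coefficient 0 than on coefficient 1.
scales = np.array([[1.0], [0.25]])
q = rng.uniform(-0.5, 0.5, size=(2, 10_000)) * scales

# Reconstruction error: x_hat - x = T^t (y + q) - T^t y = T^t q.
e = T.T @ q

# Per-sample mean-squared errors (divide by 2 samples per block).
quant_mse = np.mean(np.sum(q**2, axis=0)) / 2
recon_mse = np.mean(np.sum(e**2, axis=0)) / 2

print("quantization MSE  :", quant_mse)
print("reconstruction MSE:", recon_mse)
```

The two printed values agree up to floating-point rounding because $\|T^{t} q\|^2 = q^{t} T T^{t} q = \|q\|^2$ for every block, not just on average.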

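Since our $2 \times 2$ matrix is indeed orthogonal, we have mean-squared reconstruction error

$$\sigma_r^2\big|_{\text{TC}} = \frac{\gamma_x}{2}\Big((1 + \rho)\, 2^{-2R_0} + (1 - \rho)\, 2^{-2R_1}\Big)$$

at a bit rate of $R_0 + R_1$ bits per two samples. Comparing TC to PCM at equal bit rates (i.e., $R_0 + R_1 = 2R$),

$$\frac{\sigma_r^2\big|_{\text{TC}}}{\sigma_r^2\big|_{\text{PCM}}} = \frac{(1 + \rho)\, 2^{-2R_0} + (1 - \rho)\, 2^{-2R_1}}{2 \cdot 2^{-(R_0 + R_1)}} = \frac{1}{2}\Big((1 + \rho)\, 2^{-(R_0 - R_1)} + (1 - \rho)\, 2^{R_0 - R_1}\Big).$$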

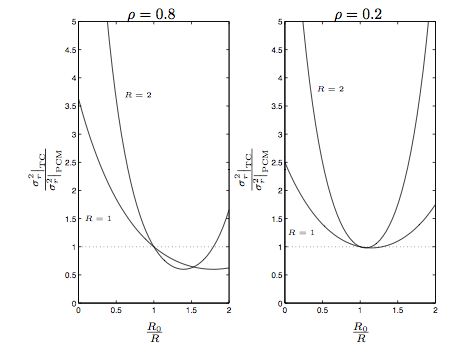

The comparison above shows that (i) allocating a higher bit rate to the quantizer with the stronger input signal can reduce the average reconstruction error relative to PCM, and (ii) the gain over PCM is higher when the input signal exhibits stronger correlation $\rho$. Also note that when $R_0 = R_1$ (equal rates for both coefficients), there is no gain over PCM, a verification of the fact that $\sigma_r^2 = \sigma_q^2$ when $T$ is orthogonal.
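Both observations can be explored with a few lines of code. The sketch below assumes the equal-rate error ratio takes the form $\frac{1}{2}\big((1+\rho)\,2^{-\Delta} + (1-\rho)\,2^{\Delta}\big)$ with $\Delta = R_0 - R_1$, which follows from the per-coefficient errors $\gamma_x (1 \pm \rho)\, 2^{-2R_i}$ used in this example. The function name `tc_over_pcm` and the chosen $\rho$ values are hypothetical, and $\Delta$ is treated as a continuous quantity even though practical rates are usually integers.

```python
import numpy as np

def tc_over_pcm(rho, delta):
    """TC-to-PCM error ratio at equal total rate; delta = R0 - R1."""
    return 0.5 * ((1 + rho) * 2.0 ** (-delta) + (1 - rho) * 2.0 ** delta)

results = {}
for rho in (0.0, 0.5, 0.9):
    # Setting the derivative with respect to delta to zero gives
    # 2^(2*delta) = (1 + rho) / (1 - rho).
    delta_opt = 0.5 * np.log2((1 + rho) / (1 - rho))
    results[rho] = (tc_over_pcm(rho, 0.0), delta_opt, tc_over_pcm(rho, delta_opt))
    print(f"rho={rho}: equal rates -> {results[rho][0]:.3f}, "
          f"best delta = {delta_opt:.3f}, best ratio = {results[rho][2]:.3f}")
```

With equal rates the ratio is 1 for every $\rho$. At the minimizing $\Delta$ the ratio works out to $\sqrt{1 - \rho^2}$, so the achievable gain grows as $|\rho|$ approaches 1 and vanishes for an uncorrelated source.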
