| << Chapter < Page | Chapter >> Page > |

Since the entropy defines the smallest number of bits that can be used to encode a source, it can be used to definethe efficiency of a code

Thus the efficiency of the naive code [link] is while the efficiency of the variable rate code [link] is 1. Shannon's source coding theorem says that ifan independent source has entropy , then there exists a prefix code in which the averagenumber of bits per symbol is between and . Moreover, there is no uniquely decodable codethat has smaller average length. Thus, if symbols (each with entropy ) are compressed into less than bits, information is lost, while information need not be lost if bits are used. Shannon has defined the goal towardswhich all codes aspire, but provides no way of finding good codes for any particular case.

Fortunately, D. A. Huffman proposed an organized procedure to build variable-length codes that are as efficient as possible.Given a set of symbols and their probabilities, the procedure is as follows:

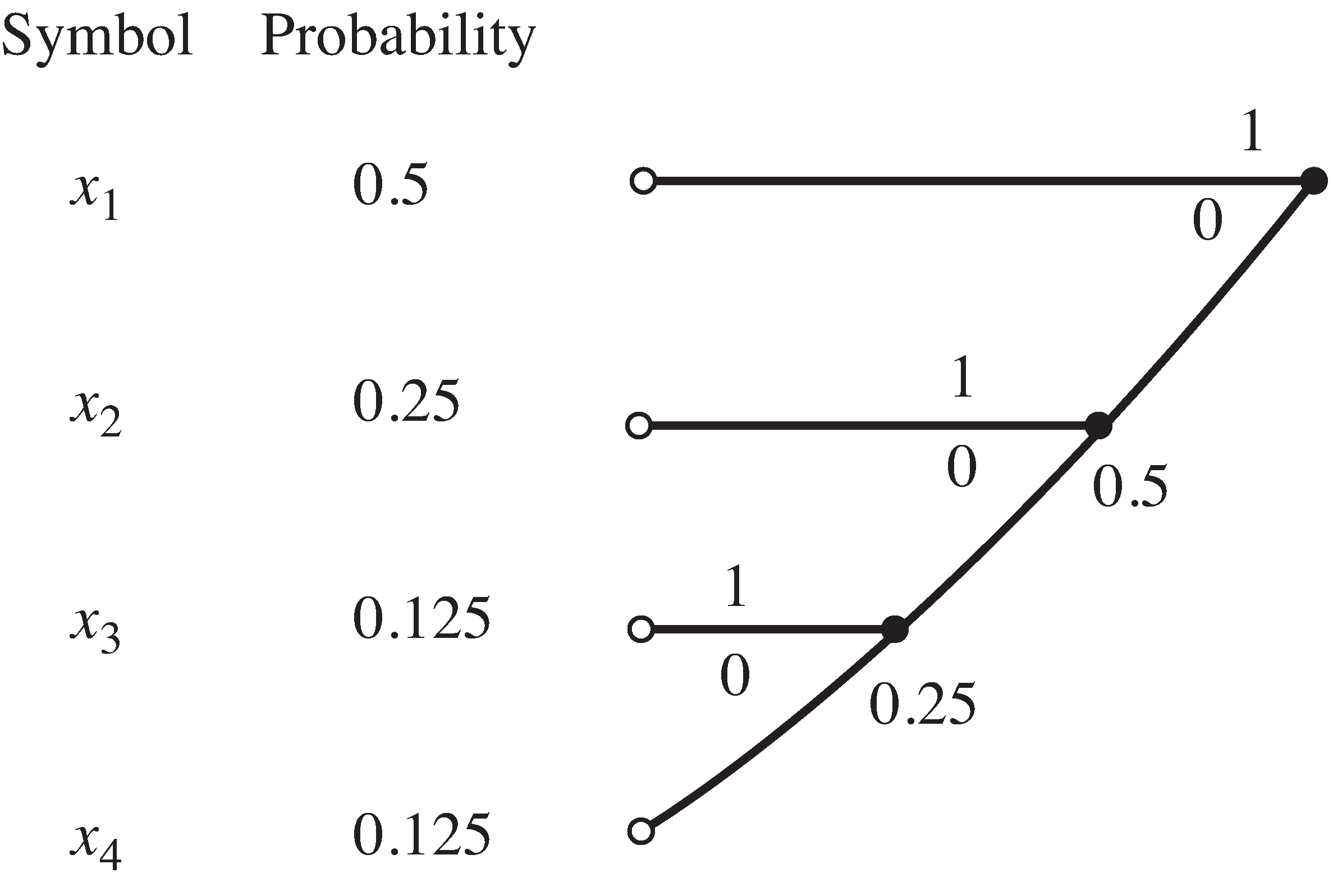

This procedure is probably easiest to understand by working through an example. Consider again the code from Example [link] (a) in which the symbols have probabilities , , and . Following the foregoing procedure leads to the chart shown in [link] . In the first step, and are combined to form a new node with probability equal to (the sum ). Then this new node is combined with to form a new node with probability . Finally, this is combined with to form the rightmost node. Each branch is now labelled. The convention used in [link] is to place a 1 on the top and a 0 on the bottom (assigning the binary digits in another order justrelabels the code). The Huffman code for this source can be read from the chart. Reading from the right-hand side, corresponds to 1, corresponds 01, to 001 and to 000. This is the same code as in [link] .

The Huffman procedure with a consistent branch labelling convention always leads to a prefix code because all the symbols end the same (except for the maximal length symbol ). More importantly, it always leads to a code that has average lengthvery near the optimal.

Consider the source with symbols with probabilities , , , , and .

Notification Switch

Would you like to follow the 'Software receiver design' conversation and receive update notifications?