
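We used the Pattern Recognition tool in MATLAB R2014a (nprtool) to generate a neural network for our feature and target matrices. [3] The workflow for the general neural network design process has seven primary steps: collect data, create the network, configure the network, initialize the weights and biases, train the network, validate the network, and use the network.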

We used the MATLAB defaults for weights and biases, and tested several hidden-layer sizes. A single hidden layer of 20 neurons gave us the best testing results.

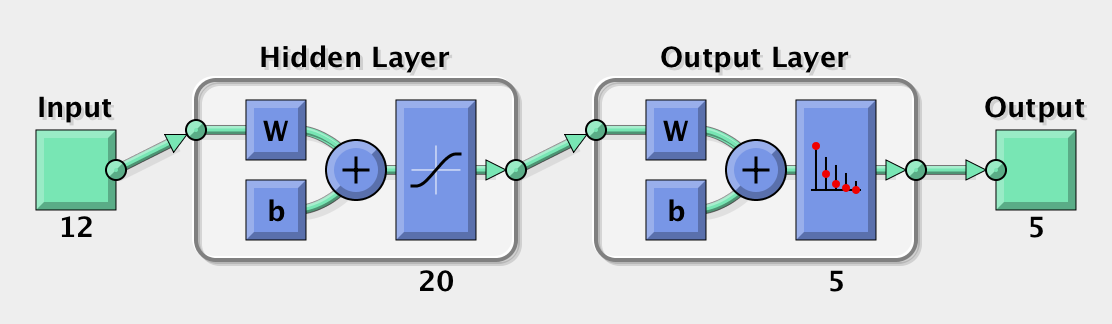

Figure 2. General neuron model.

Each neuron applies a differentiable transfer function f to the weighted sum of its inputs plus a bias to generate its output. Tan-sigmoid (hyperbolic tangent sigmoid) neurons are generally used for pattern recognition problems. Our neural network had a single hidden layer of 20 neurons, each using a tan-sigmoid transfer function.
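The general neuron model can be sketched in a few lines of Python. This is only an illustration of a weighted sum followed by a tan-sigmoid transfer function, not the code nprtool runs; the function name `neuron_output` and all numeric values are hypothetical.

```python
import math

def neuron_output(inputs, weights, bias, transfer=math.tanh):
    """Weighted sum of the inputs plus a bias, passed through a transfer function f."""
    net_input = sum(w * p for w, p in zip(weights, inputs)) + bias
    return transfer(net_input)

# Hypothetical inputs, weights, and bias, chosen only for illustration.
a = neuron_output(inputs=[0.5, -1.0, 2.0], weights=[0.4, 0.3, -0.1], bias=0.1)
print(a)  # always lies strictly between -1 and 1 for a tan-sigmoid neuron
```

Because the hyperbolic tangent is smooth and bounded, its derivative exists everywhere, which is what gradient-based training requires.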

Figure 3. Our output neural network.

This type of neural network architecture is called a feedforward network. Feedforward networks often have one or more hidden layers of sigmoid neurons followed by an output layer of linear neurons. We trained our network with a method called back-propagation, which is commonly used in image processing applications because the problem is extremely complex (many features) but has a clear solution (a fixed number of classes).
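A forward pass through a feedforward network with one tan-sigmoid hidden layer and a linear output layer might look like the sketch below. The hidden layer size of 20 matches the text; the feature count, class count, weight ranges, and helper names (`layer`, `feedforward`) are placeholders, not values from our data.

```python
import math
import random

def layer(inputs, weights, biases, transfer):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [transfer(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def feedforward(inputs, hidden_w, hidden_b, output_w, output_b):
    hidden = layer(inputs, hidden_w, hidden_b, math.tanh)   # tan-sigmoid hidden layer
    return layer(hidden, output_w, output_b, lambda n: n)   # linear output layer

rng = random.Random(0)
n_features, n_hidden, n_classes = 4, 20, 3   # 4 and 3 are placeholder sizes
hidden_w = [[rng.uniform(-1, 1) for _ in range(n_features)] for _ in range(n_hidden)]
hidden_b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
output_w = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_classes)]
output_b = [rng.uniform(-1, 1) for _ in range(n_classes)]

# One hypothetical feature vector in, one score per class out.
scores = feedforward([0.2, -0.4, 0.9, 0.1], hidden_w, hidden_b, output_w, output_b)
print(scores)
```

Information flows in one direction only, from inputs through the hidden layer to the outputs, which is what makes the network "feedforward".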

Back-propagation works in small iterative steps. The input matrix is applied to the network, and the network produces an initial output based on the current state of its synaptic weights. Before training, this output is essentially random because the weights are initialized randomly. The output is then compared to the target matrix, and a mean-squared error signal is calculated. [5]
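As a small worked example, the mean-squared error between a network output and a target matrix can be computed as follows. The output values are made up, and the targets are assumed to be one-hot rows (a 1 in the column of the true class), which is an assumption about the target encoding rather than something stated above.

```python
def mean_squared_error(outputs, targets):
    """Average of squared differences over every sample and every output neuron."""
    squared = [(o - t) ** 2
               for out_row, tgt_row in zip(outputs, targets)
               for o, t in zip(out_row, tgt_row)]
    return sum(squared) / len(squared)

# Hypothetical outputs for two samples and three classes,
# with assumed one-hot target rows marking the true class.
outputs = [[0.8, 0.1, 0.1], [0.3, 0.6, 0.1]]
targets = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
mse = mean_squared_error(outputs, targets)
print(mse)
```

A perfect prediction gives an error of zero, so training aims to push this single number as low as possible.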

The error value is propagated backwards through the network, and small changes are made to the weights in each layer to reduce it. The whole process is repeated for each training image (9 for each class), and then the cycle starts again from the first image. Training continues until the error no longer changes.
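The loop described here can be sketched with plain gradient descent on a tiny network. This is a simplified stand-in, not the training algorithm nprtool actually uses: the toy data, the three hidden neurons, the learning rate, the fixed epoch count, and every function name are assumptions made for illustration.

```python
import math
import random

def make_network(n_in, n_hid, rng):
    """Small random starting weights, so the untrained output is arbitrary."""
    return {
        "w_hid": [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hid)],
        "b_hid": [rng.uniform(-0.5, 0.5) for _ in range(n_hid)],
        "w_out": [rng.uniform(-0.5, 0.5) for _ in range(n_hid)],
        "b_out": rng.uniform(-0.5, 0.5),
    }

def forward(net, x):
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(net["w_hid"], net["b_hid"])]
    output = sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"]
    return hidden, output

def mse(net, samples):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in samples) / len(samples)

def train_step(net, x, t, lr=0.1):
    """Propagate one sample's error backwards and nudge every weight."""
    hidden, output = forward(net, x)
    err = output - t   # gradient of 0.5 * (output - t)^2 with respect to the output
    for j, h in enumerate(hidden):
        # Error reaching hidden neuron j, scaled by the tanh derivative 1 - h^2.
        delta = err * net["w_out"][j] * (1.0 - h ** 2)
        net["w_out"][j] -= lr * err * h
        for i, xi in enumerate(x):
            net["w_hid"][j][i] -= lr * delta * xi
        net["b_hid"][j] -= lr * delta
    net["b_out"] -= lr * err

# Toy data (not the cell images): the target is 1 when the first input is positive.
samples = [([1.0, 0.5], 1.0), ([0.8, -0.3], 1.0),
           ([-1.0, 0.2], 0.0), ([-0.6, -0.9], 0.0)]

net = make_network(n_in=2, n_hid=3, rng=random.Random(1))
initial_error = mse(net, samples)
for epoch in range(200):
    for x, t in samples:   # one pass over every training case, then start over
        train_step(net, x, t)
final_error = mse(net, samples)
print(initial_error, final_error)
```

A real training run would stop when the error stops improving rather than after a fixed number of passes; the fixed count here just keeps the sketch short.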

We used nprtool to automatically separate the input matrix into the training (60%), validation (20%), and test (20%) matrices. These percentages are consistent with the SVM method.
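A 60/20/20 division of the samples can be illustrated as below. The sketch assumes the split is random and uses a placeholder sample count of 100; `split_indices` is a hypothetical helper, not an nprtool function.

```python
import random

def split_indices(n_samples, train=0.6, val=0.2, seed=0):
    """Shuffle sample indices and cut them into training, validation, and test sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_train = round(train * n_samples)
    n_val = round(val * n_samples)
    return (indices[:n_train],
            indices[n_train:n_train + n_val],
            indices[n_train + n_val:])   # whatever remains is the test set

train_idx, val_idx, test_idx = split_indices(100)   # 100 is a placeholder count
print(len(train_idx), len(val_idx), len(test_idx))
```

Each sample index lands in exactly one of the three sets, so the test set is never seen during training or validation.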

After the data is loaded, MATLAB generates a neural network with our specifications (one hidden layer of 20 neurons, back-propagation training). We trained the network and output the confusion matrices and receiver operating characteristic (ROC) curves. The confusion matrices show the results of the neural network for the training, validation, and testing phases, and the ROC curves plot the true positive rate against the false positive rate.
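To make the two outputs concrete, the sketch below builds a confusion matrix and computes one point of an ROC curve. The labels and scores are invented, the row and column orientation is this sketch's own convention (it may differ from MATLAB's plots), and both function names are hypothetical.

```python
def confusion_matrix(true_labels, predicted_labels, n_classes):
    """matrix[t][p] counts samples of true class t that were predicted as class p."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(true_labels, predicted_labels):
        matrix[t][p] += 1
    return matrix

def roc_point(true_labels, scores, positive_class, threshold):
    """True and false positive rates for one class at one score threshold.

    Assumes the data contains at least one positive and one negative sample.
    """
    tp = fp = fn = tn = 0
    for label, score in zip(true_labels, scores):
        predicted_positive = score >= threshold
        actual_positive = label == positive_class
        if predicted_positive and actual_positive:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif actual_positive:
            fn += 1
        else:
            tn += 1
    return tp / (tp + fn), fp / (fp + tn)

# Invented labels for six samples across three classes.
true_labels = [0, 0, 1, 1, 2, 2]
predicted = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(true_labels, predicted, n_classes=3)

# Invented network scores for class 1, evaluated at a threshold of 0.5.
class1_scores = [0.2, 0.6, 0.9, 0.7, 0.1, 0.3]
tpr, fpr = roc_point(true_labels, class1_scores, positive_class=1, threshold=0.5)
print(cm, tpr, fpr)
```

Sweeping the threshold from high to low and plotting each (false positive rate, true positive rate) pair traces out the full ROC curve for that class.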
