
We applied these three algorithms to two interesting problems: blind source separation (the cocktail party problem) and handwritten digit recognition.

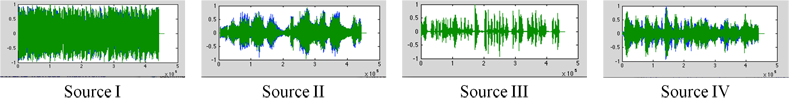

Imagine yourself at a cocktail party where Haley is telling a boring story. You are much more interested in the gossip that Alex is telling Sam, so you tune out Haley and focus on Alex’s words. Congratulations: you have just demonstrated the human ability to solve the “cocktail party problem”, picking out one thread of speech from the babble of two or more people. Here, we use machine learning techniques to achieve the same goal.

In this example, we have four different mixtures of four different sounds (CNN news material, Bach’s Symphony, a Finnish song, and the pop song Breakeven), mimicking the effect of four microphones placed at four different spots in a room. (For these algorithms to work, the number of mixtures must be equal to or greater than the number of sound sources.) We feed the mixed data matrix (eight channels in total, two for each audio file) into ICA, and the four components with the largest singular values correspond to the four sources we are looking for. The waveforms are plotted below. There is a little noise in the background, but each source is still clearly identifiable. PCA and NMF, however, pick out only one component.
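To make the separation step more concrete, here is a minimal NumPy sketch of symmetric FastICA: the mixtures are whitened, then a fixed-point iteration with a tanh nonlinearity runs until the unmixing rows stop changing. This is not the authors’ code. The synthetic sine and square-wave “sources”, the mixing matrix `A`, and the helper names `whiten`, `_sym_decorrelate`, and `fastica` are hypothetical stand-ins for the recorded audio and whatever ICA implementation was actually used.

```python
import numpy as np

def whiten(X):
    """Center X (n_signals x n_samples) and whiten it so its covariance is the identity."""
    X = X - X.mean(axis=1, keepdims=True)
    cov = X @ X.T / X.shape[1]
    eigvals, eigvecs = np.linalg.eigh(cov)
    # Symmetric whitening matrix V = E D^{-1/2} E^T
    V = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    return V @ X

def _sym_decorrelate(W):
    """Return (W W^T)^{-1/2} W, which makes the rows of W orthonormal."""
    s, u = np.linalg.eigh(W @ W.T)
    return u @ np.diag(1.0 / np.sqrt(s)) @ u.T @ W

def fastica(X, n_components, n_iter=200, tol=1e-6, seed=0):
    """Symmetric FastICA (log-cosh contrast, g = tanh); returns estimated sources."""
    Z = whiten(X)
    rng = np.random.default_rng(seed)
    W = _sym_decorrelate(rng.standard_normal((n_components, Z.shape[0])))
    for _ in range(n_iter):
        g = np.tanh(W @ Z)
        g_prime = 1.0 - g ** 2
        # Fixed-point update: w <- E[z g(w^T z)] - E[g'(w^T z)] w, for every row at once
        W_new = _sym_decorrelate((g @ Z.T) / Z.shape[1] - g_prime.mean(axis=1)[:, None] * W)
        # Converged when each new row is (anti)parallel to the corresponding old row
        converged = np.max(np.abs(np.abs(np.sum(W_new * W, axis=1)) - 1.0)) < tol
        W = W_new
        if converged:
            break
    return W @ Z

# Hypothetical sources: a smooth "voice" and a square-wave "music" track
t = np.linspace(0, 8, 4000)
S = np.vstack([np.sin(2 * t), np.sign(np.sin(3 * t))])
A = np.array([[1.0, 0.5], [0.7, 1.0]])  # hypothetical mixing matrix ("two microphones")
X = A @ S                               # observed mixtures, one row per microphone

S_est = fastica(X, n_components=2)
# ICA recovers sources only up to order, sign, and scale, so compare |correlation|
corr = np.abs(np.corrcoef(np.vstack([S, S_est]))[:2, 2:])
print(corr.round(3))
```

Each row of `corr` should contain one entry close to 1, showing that every true source is matched by one estimated component. The order and sign of the recovered signals are arbitrary, which is inherent to ICA rather than a flaw of this sketch.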

Another interesting extension: if we have two different mixtures of a song’s two channels, ICA can extract the background music from the song (think karaoke).

Have you ever wondered how the USPS handles millions of pieces of mail each day? It would be tedious and enormously labor-intensive if postal workers had to read the postcodes people scribble by hand and sort the mail into bins manually. Instead, the handwritten addresses are scanned and machine learning techniques are used to sort the mail automatically.

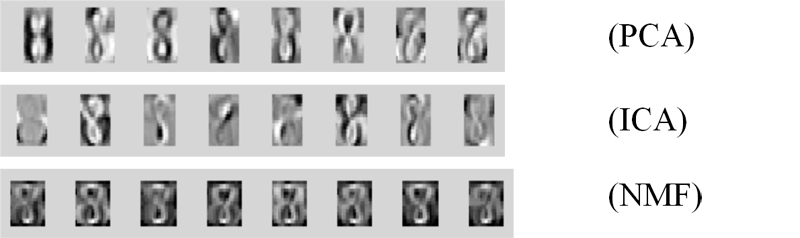

Here we ask our algorithms to learn the most significant features of the digit 8. The dataset contains 542 samples. Here are some example digits from the dataset:

And here are the first eight components given by PCA, ICA, and NMF:

These components are interpreted as follows: the first component captures the most significant feature of the digit 8, meaning that most of the 8s in the dataset share this feature, while each succeeding component captures the next most significant feature, and so on. We see that NMF picks out the most significant features of 8 in its first component, compared to PCA and ICA. Beyond visual inspection, it is hard to characterize the results. If we did have labels (supervised learning) and turned this into a classification problem involving more than one digit, the results could be characterized more precisely in terms of training and test error.
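For the digit experiment, here is a minimal NumPy sketch of how PCA and NMF components might be extracted from a data matrix whose rows are flattened images. It assumes 16x16 images (256 pixels) and uses random nonnegative placeholder data instead of the actual 542 samples. PCA is computed from the SVD of the mean-centered data. NMF uses Lee and Seung’s multiplicative updates for the Frobenius-norm objective. The function names `pca_components` and `nmf`, and the rule used to order the NMF components, are hypothetical.

```python
import numpy as np

def pca_components(X, k):
    """Top-k principal axes of X (rows = samples), ordered by singular value."""
    Xc = X - X.mean(axis=0)                # PCA operates on mean-centered data
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Vt[:k], s[:k]                   # each row of Vt is one component "image"

def nmf(X, k, n_iter=300, seed=0, eps=1e-10):
    """Lee-Seung multiplicative updates minimizing ||X - W H||_F^2 with W, H >= 0."""
    rng = np.random.default_rng(seed)
    W = rng.random((X.shape[0], k))        # per-sample weights
    H = rng.random((k, X.shape[1]))        # each row is one nonnegative component "image"
    for _ in range(n_iter):
        # Multiplicative updates keep every entry nonnegative; eps avoids division by zero
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

rng = np.random.default_rng(1)
# Placeholder for the 542 flattened images of "8" (16x16 = 256 pixels is an assumption)
X = rng.random((542, 256))

pcs, sv = pca_components(X, 8)             # first eight PCA components
W, H = nmf(X, 8)                           # eight NMF components
order = np.argsort(-W.sum(axis=0))         # hypothetical ordering: total weight in W
H_sorted = H[order]
```

Each row of `pcs` or `H_sorted` can be reshaped to 16x16 and displayed as an image. PCA components come out ordered by singular value. The multiplicative-update NMF in this sketch returns its components in no inherent order, so sorting by column sums of `W` is only one possible convention.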
