
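Also, the KKT dual-complementarity conditions (which in the next section will be useful for testing for the convergence of the SMO algorithm) are:

$$
\begin{aligned}
\alpha_i = 0 \;&\Rightarrow\; y^{(i)}(w^T x^{(i)} + b) \geq 1 \\
\alpha_i = C \;&\Rightarrow\; y^{(i)}(w^T x^{(i)} + b) \leq 1 \\
0 < \alpha_i < C \;&\Rightarrow\; y^{(i)}(w^T x^{(i)} + b) = 1.
\end{aligned}
$$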
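To make the convergence test concrete, here is a minimal sketch of how the dual-complementarity conditions might be checked numerically. It assumes the caller has already computed the functional margins $y^{(i)}(w^T x^{(i)} + b)$; the function name `kkt_violations`, the tolerance `tol`, and the choice to apply the same tolerance to the comparisons on $\alpha_i$ are illustrative assumptions, not part of the notes.

```python
def kkt_violations(alpha, margins, C, tol=1e-3):
    """Return the indices i whose (alpha_i, margin_i) pair violates the
    dual-complementarity conditions, up to a tolerance.

    margins[i] is assumed to hold y_i * (w^T x_i + b), computed by the caller.
    The name, signature, and tolerance handling are hypothetical.
    """
    bad = []
    for i, (a, m) in enumerate(zip(alpha, margins)):
        if a <= tol:                 # alpha_i = 0      =>  margin >= 1
            ok = m >= 1 - tol
        elif a >= C - tol:           # alpha_i = C      =>  margin <= 1
            ok = m <= 1 + tol
        else:                        # 0 < alpha_i < C  =>  margin == 1
            ok = abs(m - 1) <= tol
        if not ok:
            bad.append(i)
    return bad


# Toy values (made up for illustration): the last point has alpha = 0
# but a margin below 1, so it is the only violator.
violators = kkt_violations(
    alpha=[0.0, 0.5, 1.0, 0.0],
    margins=[1.7, 1.0, 0.4, 0.6],
    C=1.0,
)
print(violators)
```

An empty list would indicate that the conditions hold to within `tol` at every training point.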

Now, all that remains is to give an algorithm for actually solving the dual problem, which we will do in the next section.

The SMO (sequential minimal optimization) algorithm, due to John Platt, gives an efficient way of solving the dual problem arising from the derivation of the SVM. Partly to motivate the SMO algorithm, and partly because it's interesting in its own right, let's first take another digression to talk about the coordinate ascent algorithm.

Consider trying to solve the unconstrained optimization problem

$$
\max_{\alpha} \; W(\alpha_1, \alpha_2, \ldots, \alpha_m).
$$

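Here, we think of $W$ as just some function of the parameters $\alpha_i$, and for now ignore any relationship between this problem and SVMs. We've already seen two optimization algorithms, gradient ascent and Newton's method. The new algorithm we're going to consider here is called coordinate ascent:

$$
\begin{aligned}
&\text{Loop until convergence: } \{ \\
&\qquad \text{For } i = 1, \ldots, m: \ \{ \\
&\qquad\qquad \alpha_i := \arg\max_{\hat{\alpha}_i} W(\alpha_1, \ldots, \alpha_{i-1}, \hat{\alpha}_i, \alpha_{i+1}, \ldots, \alpha_m). \\
&\qquad \} \\
&\}
\end{aligned}
$$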

Thus, in the innermost loop of this algorithm, we will hold all the variables except for some $\alpha_i$ fixed, and reoptimize $W$ with respect to just the parameter $\alpha_i$. In the version of this method presented here, the inner loop reoptimizes the variables in order $\alpha_1, \alpha_2, \ldots, \alpha_m, \alpha_1, \alpha_2, \ldots$. (A more sophisticated version might choose other orderings; for instance, we may choose the next variable to update according to which one we expect to allow us to make the largest increase in $W(\alpha)$.)

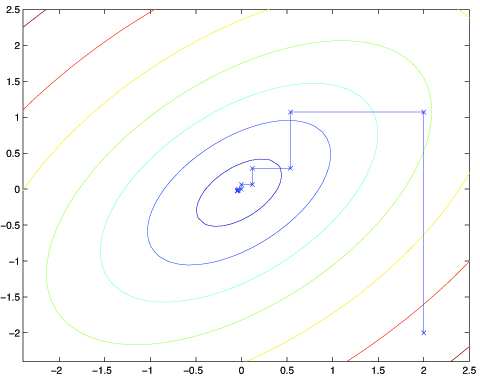

When the function happens to be of such a form that the “ ” in the inner loop can be performed efficiently, then coordinate ascent can be a fairlyefficient algorithm. Here's a picture of coordinate ascent in action:

The ellipses in the figure are the contours of a quadratic function that we want to optimize. Coordinate ascent was initialized at $(2, -2)$, and also plotted in the figure is the path that it took on its way to the global maximum. Notice that on each step, coordinate ascent takes a step that's parallel to one of the axes, since only one variable is being optimized at a time.
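The behaviour described above can be reproduced with a short sketch. It assumes a concave quadratic $W(\alpha) = -\tfrac{1}{2}\alpha^T A \alpha + b^T \alpha$ with $A$ symmetric positive definite, for which the inner "arg max" has a closed form; the particular `A`, `b`, and the function name `coordinate_ascent` are made up for illustration and are not the function shown in the figure.

```python
def coordinate_ascent(A, b, start, sweeps=50):
    """Maximize W(a) = -0.5 * a^T A a + b^T a by exact coordinate updates.

    Assumes A is symmetric positive definite, so W is strictly concave.
    Returns the final point and the list of every point visited.
    """
    a = list(start)
    n = len(a)
    path = [tuple(a)]
    for _ in range(sweeps):
        for i in range(n):
            # Setting dW/da_i = 0 with the other coordinates held fixed gives
            # a_i = (b_i - sum_{j != i} A_ij * a_j) / A_ii.
            off_diag = sum(A[i][j] * a[j] for j in range(n) if j != i)
            a[i] = (b[i] - off_diag) / A[i][i]
            path.append(tuple(a))
    return a, path


# Hypothetical quadratic whose maximizer solves A a = b, i.e. a = (1/3, 1/3).
A = [[2.0, 1.0], [1.0, 2.0]]
b = [1.0, 1.0]
alpha, path = coordinate_ascent(A, b, start=[2.0, -2.0])
print(alpha)
print(path[:4])  # consecutive points differ in one coordinate only
```

Because each update changes a single coordinate, consecutive entries of `path` differ in at most one component, which is the axis-parallel staircase pattern described for the figure.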

We close off the discussion of SVMs by sketching the derivation of the SMO algorithm. Some details will be left to the homework, and for others you may refer to the paper excerpt handed out in class.

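Here's the (dual) optimization problem that we want to solve:

$$
\begin{aligned}
\max_{\alpha} \quad & W(\alpha) = \sum_{i=1}^{m} \alpha_i - \frac{1}{2} \sum_{i,j=1}^{m} y^{(i)} y^{(j)} \alpha_i \alpha_j \langle x^{(i)}, x^{(j)} \rangle \\
\text{s.t.} \quad & 0 \leq \alpha_i \leq C, \quad i = 1, \ldots, m \\
& \sum_{i=1}^{m} \alpha_i y^{(i)} = 0.
\end{aligned}
$$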
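As a rough illustration of the dual problem's ingredients, the sketch below evaluates the objective $W(\alpha) = \sum_i \alpha_i - \tfrac{1}{2}\sum_{i,j} y^{(i)} y^{(j)} \alpha_i \alpha_j \langle x^{(i)}, x^{(j)} \rangle$ and checks the two constraints $0 \leq \alpha_i \leq C$ and $\sum_i \alpha_i y^{(i)} = 0$. It assumes a plain inner product (linear kernel); the helper names, the tolerance `eps`, and the two-point dataset are hypothetical.

```python
def dot(u, v):
    """Plain inner product <u, v> (a linear kernel is assumed here)."""
    return sum(ui * vi for ui, vi in zip(u, v))


def dual_objective(alpha, X, y):
    """W(alpha) = sum_i alpha_i - 0.5 * sum_{i,j} y_i y_j alpha_i alpha_j <x_i, x_j>."""
    m = len(alpha)
    quad = sum(
        y[i] * y[j] * alpha[i] * alpha[j] * dot(X[i], X[j])
        for i in range(m)
        for j in range(m)
    )
    return sum(alpha) - 0.5 * quad


def is_feasible(alpha, y, C, eps=1e-9):
    """Check 0 <= alpha_i <= C and sum_i alpha_i y_i = 0, up to eps."""
    in_box = all(-eps <= a <= C + eps for a in alpha)
    balanced = abs(sum(a * yi for a, yi in zip(alpha, y))) <= eps
    return in_box and balanced


# Made-up two-point dataset: one positive and one negative example.
X = [[1.0, 0.0], [-1.0, 0.0]]
y = [1, -1]
alpha = [0.5, 0.5]

W = dual_objective(alpha, X, y)
print(W, is_feasible(alpha, y, C=1.0))
```

The double loop costs $O(m^2)$ inner products per evaluation, which is fine for a toy check but is one reason practical solvers avoid recomputing $W$ from scratch.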

Let's say we have a set of $\alpha_i$'s that satisfy the constraints of the dual problem. Now, suppose we want to hold $\alpha_2, \ldots, \alpha_m$ fixed, and take a coordinate ascent step and reoptimize the objective with respect to $\alpha_1$. Can we make any progress? The answer is no, because the equality constraint $\sum_{i=1}^{m} \alpha_i y^{(i)} = 0$ ensures that

$$
\alpha_1 y^{(1)} = -\sum_{i=2}^{m} \alpha_i y^{(i)}.
$$

Or, by multiplying both sides by $y^{(1)}$, we equivalently have

$$
\alpha_1 = -y^{(1)} \sum_{i=2}^{m} \alpha_i y^{(i)}.
$$

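(This step used the fact that $y^{(1)} \in \{-1, 1\}$, and hence $(y^{(1)})^2 = 1$.) Hence, $\alpha_1$ is exactly determined by the other $\alpha_i$'s, and if we were to hold $\alpha_2, \ldots, \alpha_m$ fixed, then we can't make any change to $\alpha_1$ without violating the equality constraint $\sum_{i=1}^{m} \alpha_i y^{(i)} = 0$ in the optimization problem.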
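A small numerical check may help make this concrete. The sketch below assumes labels in $\{-1, 1\}$ and computes $\alpha_1 = -y^{(1)} \sum_{i \geq 2} \alpha_i y^{(i)}$ from the remaining multipliers; the function name `alpha1_from_constraint` and the toy values are made up for illustration, and the box constraint $0 \leq \alpha_i \leq C$ is ignored here.

```python
def alpha1_from_constraint(alpha_rest, y):
    """Return the only alpha_1 compatible with sum_i alpha_i * y_i = 0.

    alpha_rest holds alpha_2, ..., alpha_m; y holds all m labels in {-1, 1},
    with y[0] playing the role of y^(1).
    """
    rest = sum(a * yi for a, yi in zip(alpha_rest, y[1:]))
    # alpha_1 * y_1 = -rest, and y_1 * y_1 = 1, so alpha_1 = -y_1 * rest.
    return -y[0] * rest


def constraint_residual(alpha, y):
    """Value of sum_i alpha_i * y_i, which should be 0 for a feasible alpha."""
    return sum(a * yi for a, yi in zip(alpha, y))


# Hypothetical labels and multipliers.
y = [1, -1, -1, 1]
alpha_rest = [0.3, 0.4, 0.2]

alpha_1 = alpha1_from_constraint(alpha_rest, y)
residual_ok = constraint_residual([alpha_1] + alpha_rest, y)
residual_moved = constraint_residual([alpha_1 + 0.1] + alpha_rest, y)
print(alpha_1, residual_ok, residual_moved)
```

With the other multipliers held fixed, any nonzero change to `alpha_1` moves the residual away from zero by exactly that amount, so a single-coordinate step cannot stay feasible.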
